This week in DITA we looked at the concept of the semantic Web.

There is no agreed definition of what Web 3.0 should be: some people consider it an extension of Web 2.0, while others identify it with the semantic Web.

The term Semantic Web was coined by Tim Berners-Lee, who defined it in the 2001 Scientific American article “The Semantic Web”: “The Semantic Web is an extension of the current web in which information is given well-defined meaning, better enabling computers and people to work in cooperation.”

In other words, the idea is to make the Web more intelligent and intuitive about how to serve a user’s needs.

Search engines have little ability to select the pages that a user really needs. The semantic Web aims to solve this problem using context-understanding programs that, through the use of self-descriptions and other techniques, can selectively find what users want. Its central idea is that data should be not only machine-readable but also machine-understandable.

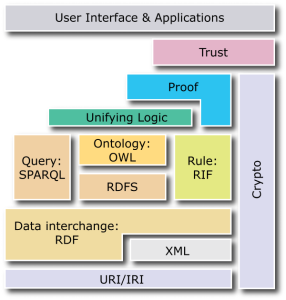

Although the semantic Web does not yet exist, the World Wide Web Consortium (W3C) has identified four technologies needed to achieve it:

- Web resources

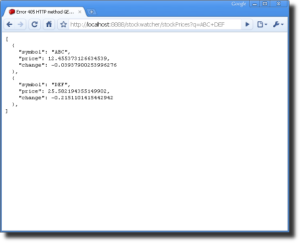

- RDF (Resource Description Framework), which is a general framework for describing metadata about Web resources. It is commonly written in XML. An RDF statement is a triple consisting of a subject (the resource being described), a predicate (a property of the subject) and an object (the value of that property). URIs (Uniform Resource Identifiers) are used for subjects and predicates, while objects can be either a URI or a literal such as a number or string. Collections of RDF statements are called RDF graphs.

- RDFS (Resource Description Framework Schema), which is a language for describing taxonomies based on RDF statements.

- OWL (Web Ontology Language), which is a set of logical rules that define relationships among the things described in the taxonomy. It can be expressed as an RDF graph.
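To make the idea of a triple more concrete, here is a minimal sketch in plain Python. It does not use a real RDF library, and the `http://example.org/` URIs are placeholders that I have made up for illustration; only the Dublin Core and FOAF property URIs are existing vocabularies. The names `Triple`, `graph` and `objects` are my own.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str    # URI of the resource being described
    predicate: str  # URI of the property
    obj: str        # value of the property: a URI or a literal


# Placeholder namespace, invented for this example.
EX = "http://example.org/"
# Property URIs taken from the Dublin Core and FOAF vocabularies.
DC_TITLE = "http://purl.org/dc/elements/1.1/title"
DC_CREATOR = "http://purl.org/dc/elements/1.1/creator"
FOAF_NAME = "http://xmlns.com/foaf/0.1/name"

# A small RDF-like graph: simply a set of triples.
graph = {
    Triple(EX + "article/semantic-web", DC_TITLE, "The Semantic Web"),
    Triple(EX + "article/semantic-web", DC_CREATOR, EX + "person/tbl"),
    Triple(EX + "person/tbl", FOAF_NAME, "Tim Berners-Lee"),
}


def objects(graph, subject, predicate):
    """Return every object stated for the given subject and predicate."""
    return sorted(
        t.obj for t in graph
        if t.subject == subject and t.predicate == predicate
    )


# Follow two statements: article -> creator -> name.
creator = objects(graph, EX + "article/semantic-web", DC_CREATOR)[0]
print(objects(graph, creator, FOAF_NAME))
```

Because the object of one statement can be the subject of another, a program can move from the article to its creator and then to the creator's name, which is what turns a list of statements into a graph.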

A markup language is a computer language that uses tags to define elements within a document. Two of the most popular markup languages are HTML and XML.

The Text Encoding Initiative (TEI) is ‘an international project to develop guidelines for the preparation and interchange of electronic texts for scholarly research, and to satisfy a broad range of uses by the language industries more generally.’

It is based on XML and relies on a schema such as a DTD (Document Type Definition), which defines the document structure with a list of legal elements and attributes.

It is possible to use an existing XML schema or to customise a content model. In the latter case, it is essential to define clearly what the tags describe and how they are going to be used.
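As a rough illustration of what "a list of legal elements and attributes" means in practice, the sketch below checks an XML fragment against a tiny content model written as a Python dictionary. This is a simplification and not real DTD validation (a DTD also constrains nesting and order), and the tag names and the `find_violations` function are hypothetical, not taken from any actual project.

```python
import xml.etree.ElementTree as ET

# Hypothetical content model: element name -> allowed attribute names.
CONTENT_MODEL = {
    "trial": {"id"},
    "persName": {"role"},
    "placeName": set(),
    "offence": {"category"},
}


def find_violations(xml_text, model):
    """List elements or attributes that the content model does not define."""
    problems = []
    # iter() walks the tree in document order, starting at the root.
    for element in ET.fromstring(xml_text).iter():
        if element.tag not in model:
            problems.append(f"unknown element <{element.tag}>")
            continue
        for attribute in element.attrib:
            if attribute not in model[element.tag]:
                problems.append(
                    f"unknown attribute {attribute!r} on <{element.tag}>"
                )
    return problems


valid = (
    '<trial id="t1"><persName role="defendant">John Smith</persName> '
    'was tried for <offence category="theft">stealing a watch</offence>.'
    "</trial>"
)
invalid = (
    '<trial id="t1"><persName age="30">John Smith</persName>'
    "<verdict>guilty</verdict></trial>"
)

print(find_violations(valid, CONTENT_MODEL))
print(find_violations(invalid, CONTENT_MODEL))
```

The second document is rejected twice: `age` is not an attribute the model allows on `persName`, and `verdict` is not a defined element at all. This is why clear definitions of each tag matter when the tag set is custom-built.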

The main difference between marked-up text and non-marked-up text is that the former can be analyzed, searched, and put into relation with other texts in a repository or corpus.
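A small sketch of that difference, assuming two invented texts marked up with the TEI-style elements `persName` and `placeName` (the sample sentences and the `find_tagged` function are hypothetical): once names are tagged, a program can list every person and the texts in which they appear, something a plain string search cannot do reliably.

```python
import xml.etree.ElementTree as ET

# Two invented texts forming a tiny corpus.
corpus = {
    "text1": (
        "<text><p><persName>Mary Jones</persName> lived in "
        "<placeName>London</placeName>.</p></text>"
    ),
    "text2": (
        "<text><p>A letter from <persName>Mary Jones</persName> to "
        "<persName>Ann Lee</persName>.</p></text>"
    ),
}


def find_tagged(corpus, tag):
    """Map each tagged string to the sorted ids of the texts containing it."""
    index = {}
    for text_id, xml_text in corpus.items():
        # iter(tag) yields only the elements with that tag name.
        for element in ET.fromstring(xml_text).iter(tag):
            index.setdefault(element.text, set()).add(text_id)
    return {value: sorted(ids) for value, ids in index.items()}


print(find_tagged(corpus, "persName"))
print(find_tagged(corpus, "placeName"))
```

The result links "Mary Jones" to both texts, which is the kind of relation across a corpus that the markup makes possible.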

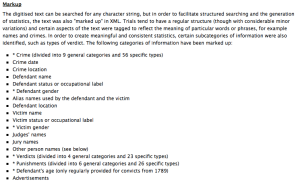

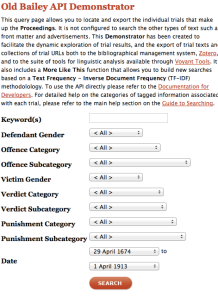

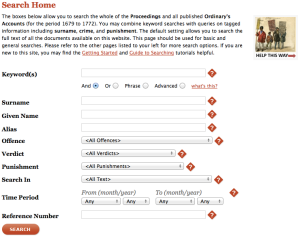

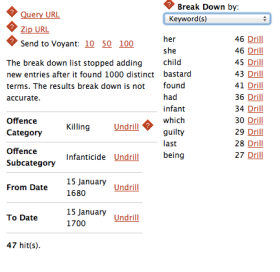

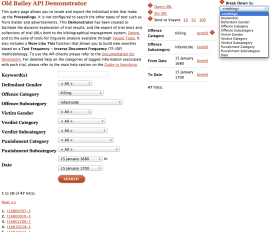

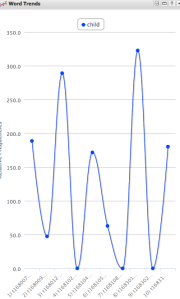

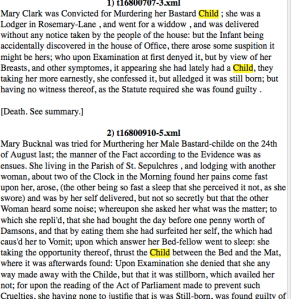

An example of a custom-built tag set is the one used by the Old Bailey Online project. The project’s website clearly states the categories that have been marked up:

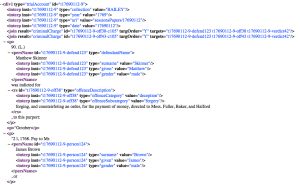

It also offers an XML view of the trials.

Artists Books Online is another good example of a custom-built tag set. It is an online repository of facsimiles, metadata and criticism.

Indexes are organized by title, artist, publication date and collection.

Books are represented by metadata in a three-level hierarchical category structure (work, edition and object) plus an additional level corresponding to images.
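The hierarchy can be pictured as nested records. The sketch below is only my reading of the description above: the class names, field names and sample values are hypothetical and are not taken from the site's actual DTD.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BookObject:
    """A single physical copy, with the images that document it."""
    location: str
    images: List[str] = field(default_factory=list)


@dataclass
class Edition:
    """One published edition of a work."""
    publication_date: str
    objects: List[BookObject] = field(default_factory=list)


@dataclass
class Work:
    """The top level: the work as an abstract creation."""
    title: str
    artist: str
    editions: List[Edition] = field(default_factory=list)


def count_images(work):
    """Count the images by walking work -> edition -> object."""
    return sum(
        len(obj.images)
        for edition in work.editions
        for obj in edition.objects
    )


# Placeholder data, invented for the example.
work = Work(
    title="Example Title",
    artist="Example Artist",
    editions=[
        Edition(
            publication_date="1990",
            objects=[
                BookObject("Library A", ["cover.jpg", "page1.jpg"]),
                BookObject("Library B", ["cover.jpg"]),
            ],
        )
    ],
)

print(count_images(work))
```

Each level only knows about the level directly below it, so metadata about the work is recorded once and shared by all of its editions and copies.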

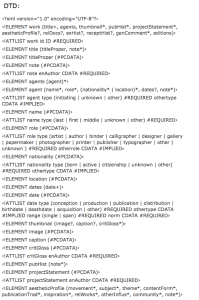

The website gives access to the DTD, the file that defines the kinds of elements, attributes, and features that the data have. In this case, it is organized in the three-level structure mentioned above: work, edition, object. This basic structure is a scholarly convention from bibliographical description.

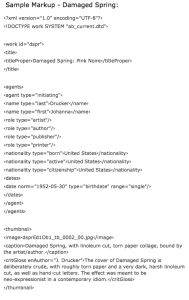

This website also provides a sample XML file for the item “Damaged Spring”, allowing us to compare it with the DTD and with the human-readable version.

---

The FAQs section is helpful and gives insight into the website’s future plans.

There are many projects that encode texts using TEI. Some of them can be found at this link. This kind of encoding could also be used in the future to create online repositories of music, video, images and so on.

With this post, we have come to the end of our lectures in DITA. I have found this module both very challenging and very helpful: it has opened my mind to interesting topics and tools, which I find extremely important for coping with the transformations the LIS profession has undergone lately.